Optimization Problem#

Wikipedia: https://en.wikipedia.org/wiki/Optimization_problem

Given a target (loss) function and a set of parameters. Find the parameters set (subjects to contraints) to minimize the function.

Curve fitting: LsqFit.jl

General optimization problems: Optim.jl

Use with ModelingToolkit: Optimization.jl

Curve fitting using LsqFit#

LsqFit.jl package is a small library that provides basic least-squares fitting in pure Julia.

using LsqFit

@. model(x, p) = p[1] * exp(-x * p[2])

model (generic function with 1 method)

Generate data

xdata = range(0, stop=10, length=20)

ydata = model(xdata, [1.0 2.0]) + 0.01 * randn(length(xdata))

20-element Vector{Float64}:

0.9957866597445789

0.3464264866352952

0.13561123237002964

0.026014744473579387

0.03947407617330938

0.015256197442177558

0.00906290514493749

-0.01127912188374244

-0.0314191234790213

-0.0190368541713084

-0.00045810336913030853

0.0046178872627228714

0.016795987367441412

0.003976474165881812

-0.0098973853940254

0.012550066915817644

-0.0062037266091590545

-0.010191631823451232

0.0015994771785855528

0.010041606965980212

Initial guess

p0 = [0.5, 0.5]

2-element Vector{Float64}:

0.5

0.5

Fit the model

fit = curve_fit(model, xdata, ydata, p0; autodiff=:forwarddiff)

LsqFit.LsqFitResult{Vector{Float64}, Vector{Float64}, Matrix{Float64}, Vector{Float64}, Vector{LsqFit.LMState{LsqFit.LevenbergMarquardt}}}([0.9947346338943851, 1.972778774924096], [-0.0010520258501938162, 0.005763717952893543, -0.010916729955589927, 0.018133896601108136, -0.023843054187564803, -0.009721964850386292, -0.007103485574804648, 0.011972863064319909, 0.03166474561782458, 0.019123817777851226, 0.0004888932193440657, -0.0046069859798314045, -0.01679212771993242, -0.003975107640579974, 0.0098978692183633, -0.012549895615667089, 0.006203787258736353, 0.0101916532967028, -0.0015994695758856916, -0.010041604274210641], [1.0 0.0; 0.3540544307876005 -0.1853632655727309; … ; 7.642942752813025e-9 -7.202557763142828e-8; 2.7060177458894275e-9 -2.691769571769029e-8], true, Iter Function value Gradient norm

------ -------------- --------------

, Float64[])

The parameters

coef(fit)

2-element Vector{Float64}:

0.9947346338943851

1.972778774924096

Curve fitting using Optimization.jl#

using Optimization

using OptimizationOptimJL

@. model(x, p) = p[1] * exp(-x * p[2])

model (generic function with 1 method)

Generate data

xdata = range(0, stop=10, length=20)

ydata = model(xdata, [1.0 2.0]) + 0.01 * randn(length(xdata))

function lossl2(p, data)

x, y = data

y_pred = model(x, p)

return sum(abs2, y_pred .- y)

end

p0 = [0.5, 0.5]

data = [xdata, ydata]

prob = OptimizationProblem(lossl2, p0, data)

res = solve(prob, Optim.NelderMead())

retcode: Success

u: 2-element Vector{Float64}:

1.0247262200979093

2.073554941739439

2D Rosenbrock Function#

From: https://docs.sciml.ai/ModelingToolkit/stable/tutorials/optimization/ Wikipedia: https://en.wikipedia.org/wiki/Rosenbrock_function

Find \((x, y)\) that minimizes the loss function \((a - x)^2 + b(y - x^2)^2\)

using ModelingToolkit

using Optimization

using OptimizationOptimJL

@variables begin

x, [bounds = (-2.0, 2.0)]

y, [bounds = (-1.0, 3.0)]

end

@parameters a b

Define the target (loss) function The optimization algorithm will try to minimize its value

loss = (a - x)^2 + b * (y - x^2)^2

Build the OptimizationSystem

@mtkbuild sys = OptimizationSystem(loss, [x, y], [a, b])

Initial guess

u0 = [x => 1.0, y => 2.0]

2-element Vector{Pair{Symbolics.Num, Float64}}:

x => 1.0

y => 2.0

parameters

p = [a => 1.0, b => 100.0]

2-element Vector{Pair{Symbolics.Num, Float64}}:

a => 1.0

b => 100.0

ModelingToolkit can generate gradient and Hessian to solve the problem more efficiently.

prob = OptimizationProblem(sys, u0, p, grad=true, hess=true)

OptimizationProblem. In-place: true

u0: 2-element Vector{Float64}:

1.0

2.0

Solve the problem The true solution is (1.0, 1.0)

u_opt = solve(prob, GradientDescent())

retcode: Success

u: 2-element Vector{Float64}:

1.0000000135463598

1.0000000271355158

Adding constraints#

OptimizationSystem(..., constraints = cons)

@variables begin

x, [bounds = (-2.0, 2.0)]

y, [bounds = (-1.0, 3.0)]

end

@parameters a = 1 b = 100

loss = (a - x)^2 + b * (y - x^2)^2

Constraints are define using ≲ (\lesssim) or ≳ (\gtrsim)

cons = [

x^2 + y^2 ≲ 1,

]

@mtkbuild sys = OptimizationSystem(loss, [x, y], [a, b], constraints=cons)

u0 = [x => 0.14, y => 0.14]

prob = OptimizationProblem(sys, u0, grad=true, hess=true, cons_j=true, cons_h=true)

OptimizationProblem. In-place: true

u0: 2-element Vector{Float64}:

0.14

0.14

Use interior point Newton method for constrained optimization

solve(prob, IPNewton())

┌ Warning: The selected optimization algorithm requires second order derivatives, but `SecondOrder` ADtype was not provided.

│ So a `SecondOrder` with SciMLBase.NoAD() for both inner and outer will be created, this can be suboptimal and not work in some cases so

│ an explicit `SecondOrder` ADtype is recommended.

└ @ OptimizationBase ~/.julia/packages/OptimizationBase/gvXsf/src/cache.jl:49

retcode: Success

u: 2-element Vector{Float64}:

0.7864151541684254

0.6176983125233897

Parameter estimation#

From: https://docs.sciml.ai/DiffEqParamEstim/stable/getting_started/

DiffEqParamEstim.jl is not installed with DifferentialEquations.jl. You need to install it manually:

using Pkg

Pkg.add("DiffEqParamEstim")

using DiffEqParamEstim

The key function is DiffEqParamEstim.build_loss_objective(), which builds a loss (objective) function for the problem against the data. Then we can use optimization packages to solve the problem.

Estimate a single parameter from the data and the ODE model#

Let’s optimize the parameters of the Lotka-Volterra equation.

using OrdinaryDiffEq

using Plots

using DiffEqParamEstim

using ForwardDiff

using Optimization

using OptimizationOptimJL

Example model

function lotka_volterra!(du, u, p, t)

du[1] = dx = p[1] * u[1] - u[1] * u[2]

du[2] = dy = -3 * u[2] + u[1] * u[2]

end

u0 = [1.0; 1.0]

tspan = (0.0, 10.0)

p = [1.5] ## The true parameter value

prob = ODEProblem(lotka_volterra!, u0, tspan, p)

ODEProblem with uType Vector{Float64} and tType Float64. In-place: true

timespan: (0.0, 10.0)

u0: 2-element Vector{Float64}:

1.0

1.0

True solution

sol = solve(prob, Tsit5())

retcode: Success

Interpolation: specialized 4th order "free" interpolation

t: 34-element Vector{Float64}:

0.0

0.0776084743154256

0.2326451370670694

0.42911851563726466

0.679082199936808

0.9444046279774128

1.2674601918628516

1.61929140093895

1.9869755481702074

2.2640903679981617

⋮

7.5848624442719235

7.978067891667038

8.483164641366145

8.719247691882519

8.949206449510513

9.200184762926114

9.438028551201125

9.711807820573478

10.0

u: 34-element Vector{Vector{Float64}}:

[1.0, 1.0]

[1.0454942346944578, 0.8576684823217128]

[1.1758715885890039, 0.6394595702308831]

[1.419680958026516, 0.45699626144050703]

[1.8767193976262215, 0.3247334288460738]

[2.5882501035146133, 0.26336255403957304]

[3.860709084797009, 0.27944581878759106]

[5.750813064347339, 0.5220073551361045]

[6.814978696356636, 1.917783405671627]

[4.392997771045279, 4.194671543390719]

⋮

[2.6142510825026886, 0.2641695435004172]

[4.241070648057757, 0.30512326533052475]

[6.79112182569163, 1.13452538354883]

[6.265374940295053, 2.7416885955953294]

[3.7807688120520893, 4.431164521488331]

[1.8164214705302744, 4.064057991958618]

[1.146502825635759, 2.791173034823897]

[0.955798652853089, 1.623563316340748]

[1.0337581330572414, 0.9063703732075853]

Create a sample dataset with some noise.

ts = range(tspan[begin], tspan[end], 200)

data = [sol.(ts, idxs=1) sol.(ts, idxs=2)] .* (1 .+ 0.03 .* randn(length(ts), 2))

200×2 Matrix{Float64}:

1.02276 1.03065

1.01959 0.949974

1.06525 0.823818

1.09077 0.720844

1.177 0.648037

1.16114 0.626596

1.22184 0.562003

1.34569 0.494766

1.43041 0.483475

1.40953 0.437011

⋮

0.979869 2.04579

0.936601 1.8051

0.933202 1.66485

0.937123 1.49034

0.959596 1.33604

0.931077 1.185

0.944259 1.11327

1.00109 1.04857

1.09656 0.94945

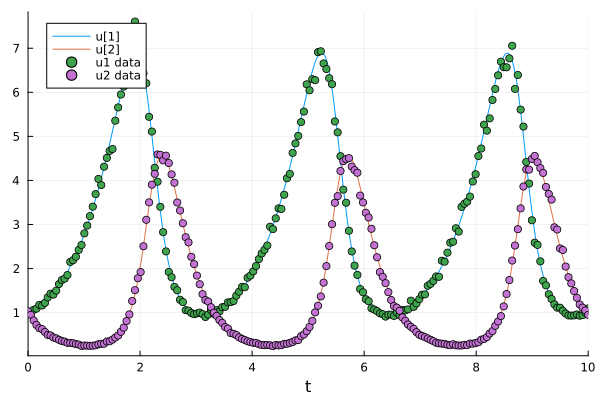

Plotting the sample dataset and the true solution.

plot(sol)

scatter!(ts, data, label=["u1 data" "u2 data"])

DiffEqParamEstim.build_loss_objective() builds a loss function for the ODE problem for the data.

We will minimize the mean squared error using L2Loss().

Note that

the data should be transposed.

Uses

AutoForwardDiff()as the automatic differentiation (AD) method since the number of parameters plus states is small (<100). For larger problems, one can useOptimization.AutoZygote().

alg = Tsit5()

cost_function = build_loss_objective(

prob, alg,

L2Loss(collect(ts), transpose(data)),

Optimization.AutoForwardDiff(),

maxiters=10000, verbose=false

)

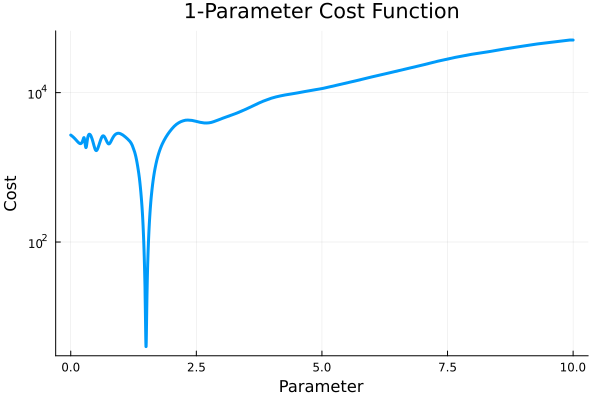

plot(

cost_function, 0.0, 10.0,

linewidth=3, label=false, yscale=:log10,

xaxis="Parameter", yaxis="Cost", title="1-Parameter Cost Function"

)

There is a dip (minimum) in the cost function at the true parameter value (1.5). We can use an optimizer e.g., Optimization.jl, to find the parameter value that minimizes the cost. (1.5 in this case)

optprob = Optimization.OptimizationProblem(cost_function, [1.42])

optsol = solve(optprob, BFGS())

retcode: Success

u: 1-element Vector{Float64}:

1.4991937372651487

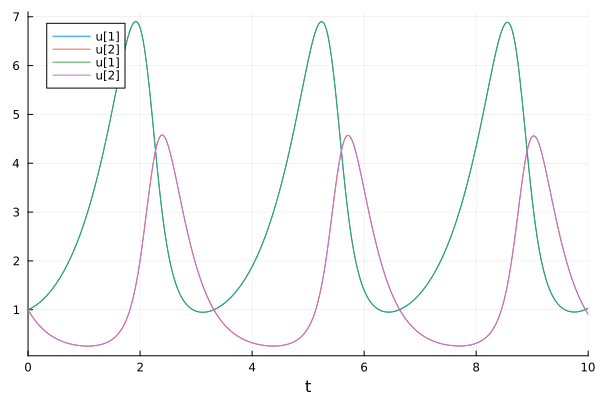

The fitting result:

newprob = remake(prob, p=optsol.u)

newsol = solve(newprob, Tsit5())

plot(sol)

plot!(newsol)

Estimate multiple parameters#

Let’s use the Lotka-Volterra (Fox-rabbit) equations with all 4 parameters free.

function f2(du, u, p, t)

du[1] = dx = p[1] * u[1] - p[2] * u[1] * u[2]

du[2] = dy = -p[3] * u[2] + p[4] * u[1] * u[2]

end

u0 = [1.0; 1.0]

tspan = (0.0, 10.0)

p = [1.5, 1.0, 3.0, 1.0] ## True parameters

alg = Tsit5()

prob = ODEProblem(f2, u0, tspan, p)

sol = solve(prob, alg)

retcode: Success

Interpolation: specialized 4th order "free" interpolation

t: 34-element Vector{Float64}:

0.0

0.0776084743154256

0.2326451370670694

0.42911851563726466

0.679082199936808

0.9444046279774128

1.2674601918628516

1.61929140093895

1.9869755481702074

2.2640903679981617

⋮

7.5848624442719235

7.978067891667038

8.483164641366145

8.719247691882519

8.949206449510513

9.200184762926114

9.438028551201125

9.711807820573478

10.0

u: 34-element Vector{Vector{Float64}}:

[1.0, 1.0]

[1.0454942346944578, 0.8576684823217128]

[1.1758715885890039, 0.6394595702308831]

[1.419680958026516, 0.45699626144050703]

[1.8767193976262215, 0.3247334288460738]

[2.5882501035146133, 0.26336255403957304]

[3.860709084797009, 0.27944581878759106]

[5.750813064347339, 0.5220073551361045]

[6.814978696356636, 1.917783405671627]

[4.392997771045279, 4.194671543390719]

⋮

[2.6142510825026886, 0.2641695435004172]

[4.241070648057757, 0.30512326533052475]

[6.79112182569163, 1.13452538354883]

[6.265374940295053, 2.7416885955953294]

[3.7807688120520893, 4.431164521488331]

[1.8164214705302744, 4.064057991958618]

[1.146502825635759, 2.791173034823897]

[0.955798652853089, 1.623563316340748]

[1.0337581330572414, 0.9063703732075853]

ts = range(tspan[begin], tspan[end], 200)

data = [sol.(ts, idxs=1) sol.(ts, idxs=2)] .* (1 .+ 0.01 .* randn(length(ts), 2))

200×2 Matrix{Float64}:

0.997604 0.992718

1.02826 0.906709

1.06027 0.815675

1.10136 0.736111

1.16057 0.674389

1.18828 0.616884

1.24445 0.562127

1.30033 0.515307

1.39447 0.487187

1.43716 0.449591

⋮

1.00371 2.01181

0.974469 1.84733

0.960664 1.67316

0.951381 1.52676

0.958422 1.37988

0.977145 1.21615

0.976921 1.09713

1.01513 1.00545

1.03349 0.904746

Then we can find multiple parameters at once using the same steps. True parameters are [1.5, 1.0, 3.0, 1.0].

cost_function = build_loss_objective(

prob, alg, L2Loss(collect(ts), transpose(data)),

Optimization.AutoForwardDiff(),

maxiters=10000, verbose=false

)

optprob = Optimization.OptimizationProblem(cost_function, [1.3, 0.8, 2.8, 1.2])

result_bfgs = solve(optprob, LBFGS())

retcode: Failure

u: 4-element Vector{Float64}:

1.5042738031309957

1.0023887274383827

2.988429282092708

0.9965586556454187

This notebook was generated using Literate.jl.